Collect IIS Logs Using Filebeat, Logstash, Elasticsearch and Kibana

We will collect IIS logs using Filebeat, Logstash, Elasticsearch and Kibana (the ELK stack). Filebeat can collect from many log paths on the same Windows server.

- Day 1. What is ELK stack?

- 1. Problem we need to solve

- 2. What is ELK stack

- 3. Architecture of ELK stack

- 4. Summary

- Day 2. Set up & configure Elasticsearch and Kibana on Linux Ubuntu

- Step 1: Update & Install Dependencies

- Step 2: Install Elasticsearch on Ubuntu

- Step 3: Install & run Kibana on Ubuntu

- Day 3. Set up & configure Logstash on Linux Ubuntu

- Step 1: Install Logstash on Ubuntu

- Step 2: Configure Logstash

- Day 4. Set up & configure Filebeat on Windows

Day 1. What is ELK stack?

1. Problem we need to solve

I have a large website deployed to 5 servers (nodes) behind a load balancer. The project uses NLog to write to a log file whenever the website throws an exception. Because each server keeps its own log files, checking for new exceptions means going through the servers one by one, which wastes a lot of time.

2. What is ELK stack

The ELK stack provides centralized logging so that you can identify problems with servers or applications. It lets you search all the logs in a single place, and it helps you trace an issue across multiple servers by correlating their logs over a specific time frame.

ELK stands for Elasticsearch, Logstash and Kibana:

- Elasticsearch is a search engine that stores and indexes the data.

- Logstash is a filter engine that collects, processes and filters logs.

- Kibana is a visualization tool that displays the data stored in Elasticsearch.

3. Architecture of ELK stack

ELK is flexible, and there are several common designs you can choose from:

This is the standard flow of the ELK stack. It is suited for collecting data from IIS logs, log folders, databases, Syslog, Nginx logs and so on.

In practice, some projects are very big and log much more than exceptions: info messages, warnings, activities, flow history or user behavior. In that case a buffer can help keep the system responsive under load, as in the flow below:

As the image above shows, we can improve performance by adding a buffering layer between Beats and Logstash. Message queues such as Redis, Kafka or RabbitMQ can serve as that buffering layer.

I once worked on a production website with more than 5 million users per month. I also used the ELK stack to collect logs there, but without Beats: the project used NLog to push logs directly into Kafka. I think this is also a good approach for some projects.

In small, uncomplicated projects, Beats can ship log data directly to Elasticsearch without Logstash. Logstash parses and normalizes logs, so if you collect logs from many sources in different formats, you need Logstash.
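To make the buffering idea concrete, here is a minimal Python sketch that is not part of the original setup. It uses an in-memory queue.Queue as a stand-in for a real message queue such as Redis, Kafka or RabbitMQ, and the names run_pipeline and buffer_size are hypothetical. The producer blocks when the buffer is full, so a slow consumer slows the shipper down instead of losing events.

```python
import queue
import threading


def run_pipeline(events, buffer_size=100):
    """Pass events through a bounded buffer to a single consumer thread."""
    # Stand-in for Redis/Kafka/RabbitMQ: a bounded, thread-safe FIFO queue.
    buffer = queue.Queue(maxsize=buffer_size)
    processed = []
    sentinel = object()  # marks the end of the stream

    def consumer():
        while True:
            item = buffer.get()
            if item is sentinel:
                break
            # Stand-in for the parsing work Logstash would do.
            processed.append(item.upper())

    worker = threading.Thread(target=consumer)
    worker.start()

    for event in events:
        # put() blocks while the buffer is full (backpressure on the shipper).
        buffer.put(event)
    buffer.put(sentinel)
    worker.join()
    return processed


print(run_pipeline(["error a", "warn b", "info c"], buffer_size=2))
```

A real message queue adds persistence and lets several consumers share the load, which this in-process sketch does not attempt to show.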

4. Summary

In this article, I will use the standard flow to collect logs from a website deployed on an IIS server (Windows), where the website writes many log files. I will install Logstash, Elasticsearch and Kibana on a Linux server and install Filebeat on the Windows server that hosts the website.

Okay, let’s go to day 2!!

Day 2. Set up & configure Elasticsearch and Kibana on Linux Ubuntu

Step 1: Update & Install Dependencies

I am using Ubuntu 20.04 and will install the ELK stack on it. The ELK stack runs on Java, so install Java 8 first. Note that the 7.x packages installed below also ship with a bundled JDK (it appears in the service output later in this article), so a system-wide Java may not be strictly required. To install Java 8, run the command:

sudo apt-get install openjdk-8-jdk

If you get the error “E: Unable to locate package openjdk-8-jdk”, refresh the package index first with sudo apt-get update, then install Java 8 again.

Step 2: Install Elasticsearch on Ubuntu

1. Import the PGP key for Elastic

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

2. Install the apt-transport-https package

sudo apt-get install apt-transport-https

3. Add the Elastic repository to your system’s repository list:

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

4. Update the apt-get package index before installing Elasticsearch

sudo apt-get update

5. Install Elasticsearch by running the command below:

sudo apt-get install elasticsearch

6. Configure Elasticsearch

- Run the command below to edit the config of Elasticsearch:

sudo vim /etc/elasticsearch/elasticsearch.yml

Uncomment the two lines below (remove the #):

network.host: 192.168.0.1

http.port: 9200

Change 192.168.0.1 to localhost. Alternatively, set it to 0.0.0.0 to allow access from other servers; in that case, do not forget to open port 9200.

If you run Elasticsearch on a single server without replication, also add the option discovery.type: single-node so that it runs as a single node.

7. Configure JVM heap size (optional)

By default, the JVM heap size is set to 1GB (recent 7.x releases may instead size the heap automatically from the available memory). You can reduce or increase it by editing the jvm.options file with the command:

sudo vim /etc/elasticsearch/jvm.options

8. Start the Elasticsearch service

sudo systemctl start elasticsearch.service

9. Enable Elasticsearch to start at boot

sudo systemctl enable elasticsearch.service

10. Test and check version

To check whether Elasticsearch is running, run the command:

sudo systemctl status elasticsearch.service

And the result:

● elasticsearch.service - Elasticsearch

Loaded: loaded (/lib/systemd/system/elasticsearch.service; enabled; vendor preset: enabled)

Active: active (running) since Tue 2022-03-08 03:33:29 UTC; 4 days ago

Docs: https://www.elastic.co

Main PID: 6871 (java)

Tasks: 86 (limit: 19189)

Memory: 9.5G

CGroup: /system.slice/elasticsearch.service

├─6871 /usr/share/elasticsearch/jdk/bin/java -Xshare:auto -Des.networkaddress.cache.ttl=60 -Des.netwo>

└─7068 /usr/share/elasticsearch/modules/x-pack-ml/platform/linux-x86_64/bin/controller

Mar 08 03:33:10 instance-2 systemd[1]: Starting Elasticsearch...

Mar 08 03:33:29 instance-2 systemd[1]: Started Elasticsearch.

To check the version of Elasticsearch, run the command:

curl -X GET "localhost:9200"

And the result:

{

"name" : "instance-2",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "yUrDaXARQmWT4VViwuHWUQ",

"version" : {

"number" : "7.17.1",

"build_flavor" : "default",

"build_type" : "deb",

"build_hash" : "e5acb99f822233d62d6444ce45a4543dc1c8059a",

"build_date" : "2022-02-23T22:20:54.153567231Z",

"build_snapshot" : false,

"lucene_version" : "8.11.1",

"minimum_wire_compatibility_version" : "6.8.0",

"minimum_index_compatibility_version" : "6.0.0-beta1"

},

"tagline" : "You Know, for Search"

}

Done installing Elasticsearch!
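The version check can also be done from a script. The sketch below is only an illustration: parse_es_version is a hypothetical helper, and sample_body is a trimmed copy of the response shown above rather than a live call. It reads version.number from the JSON and turns it into a tuple that can be compared.

```python
import json


def parse_es_version(body):
    """Return the version from the Elasticsearch root response as a tuple of ints."""
    info = json.loads(body)
    number = info["version"]["number"]   # e.g. "7.17.1"
    core = number.split("-")[0]          # drop suffixes such as "-SNAPSHOT"
    return tuple(int(part) for part in core.split("."))


# Trimmed copy of the response printed by curl above.
sample_body = """
{
  "name" : "instance-2",
  "cluster_name" : "elasticsearch",
  "version" : { "number" : "7.17.1", "build_type" : "deb" },
  "tagline" : "You Know, for Search"
}
"""

version = parse_es_version(sample_body)
print(version)
print("7.x or newer:", version >= (7, 0, 0))
```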

Step 3: Install & run Kibana on Ubuntu

1. Install Kibana by running the command:

sudo apt-get install kibana

2. Configure Kibana by editing the kibana.yml file

sudo vim /etc/kibana/kibana.yml

Delete the # sign at the beginning of the lines below:

server.port: 5601

server.host: "your-hostname"

elasticsearch.hosts: ["http://localhost:9200"]

Change “your-hostname” to “0.0.0.0” to allow access from other machines. Do not forget to open port 5601.

If you use the UFW firewall, you can run the command sudo ufw allow 5601/tcp to open that port.

3. Start Kibana service

sudo systemctl start kibana

4. Enable Kibana to run at boot

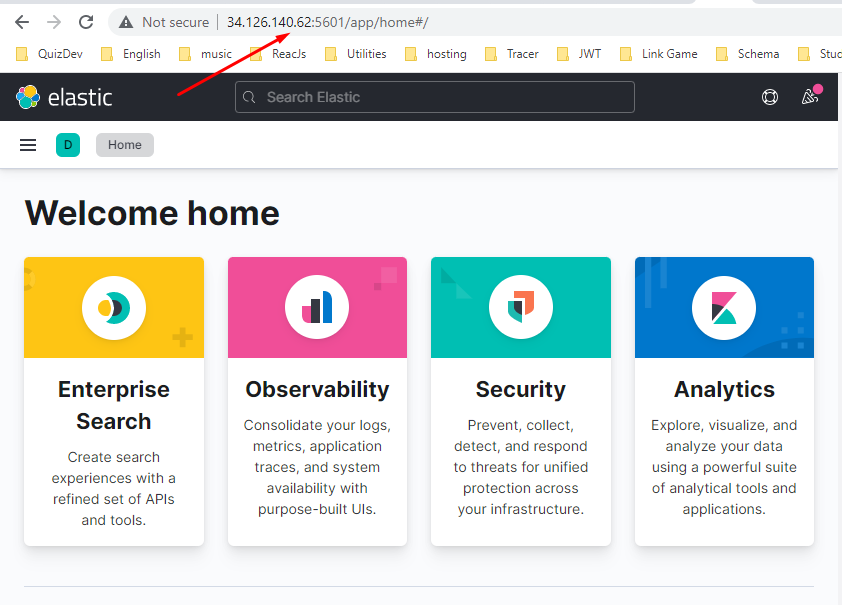

sudo systemctl enable kibana

After that, you can open http://[your-ip]:5601 to check the Kibana website.
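If Kibana or Elasticsearch cannot be reached from another machine, a quick TCP check helps tell a firewall problem apart from a service problem. This is a minimal sketch using only the Python standard library; port_is_open is a hypothetical helper name, and the host and ports in the usage lines are the ones assumed in this article.

```python
import socket


def port_is_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # Replace "localhost" with your server address when checking remotely.
    for port in (9200, 5601):
        print(port, "open" if port_is_open("localhost", port) else "closed")
```

A closed result from a remote machine combined with an open result on the server itself usually points to the firewall or to the bind address (network.host or server.host).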

Day 3. Set up & configure Logstash on Linux Ubuntu

Step 1: Install Logstash on Ubuntu

1. Install Logstash by running the command:

sudo apt-get install logstash

2. Start the Logstash service:

sudo systemctl start logstash

3. Enable the Logstash service:

sudo systemctl enable logstash

4. Check the status of the service by running the command:

sudo systemctl status logstash

If you see output similar to the result below, Logstash is running on your server:

logstash.service - logstash

Loaded: loaded (/etc/systemd/system/logstash.service; enabled; vendor preset: enabled)

Active: active (running) since Sat 2022-03-12 08:26:58 UTC; 8s ago

Main PID: 13630 (java)

Tasks: 18 (limit: 4915)

CGroup: /system.slice/logstash.service

└─13630 /usr/share/logstash/jdk/bin/java -Xms1g -Xmx1g -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupanc

Mar 12 08:26:58 instance-3 systemd[1]: Started logstash.

Mar 12 08:26:58 instance-3 logstash[13630]: Using bundled JDK: /usr/share/logstash/jdk

Step 2: Configure Logstash

A Logstash config file has three parts: input, filter and output, as in the image below:

In that:

+ Input: listens for data from UDP, TCP, Beats, a database, a log file path and so on.

+ Filter: parses the different log types into a common format.

+ Output: sends the filtered data to Elasticsearch.

In Logstash, each pipeline is a config file, so we can create many pipelines to collect data from many sources.

For example, I will create a first-pipeline.conf file that collects IIS log data from Beats. I will create this file inside the folder “/usr/share/logstash”.

input {
  beats {
    port => 5044
  }
}

filter {
  # check that fields match your IIS log settings
  grok {
    match => ["message", "%{TIMESTAMP_ISO8601:timestamp} %{IPORHOST:site} %{WORD:method} %{URIPATH:page} %{NOTSPACE:querystring} %{NUMBER:port} %{NOTSPACE:username} %{IPORHOST:clienthost} %{NOTSPACE:useragent} (%{URI:referer})? %{NUMBER:response} %{NUMBER:subresponse} %{NUMBER:scstatus} %{NUMBER:time_taken}"]
  }

  # set the event timestamp from the log
  # https://www.elastic.co/guide/en/logstash/current/plugins-filters-date.html
  date {
    match => [ "timestamp", "YYYY-MM-dd HH:mm:ss" ]
    timezone => "Etc/UTC"
  }

  # matches the big, long nasty useragent string to the actual browser name, version, etc
  # https://www.elastic.co/guide/en/logstash/current/plugins-filters-useragent.html
  useragent {
    source => "useragent"
    prefix => "browser_"
  }
}

# output logs to console and to elasticsearch
output {
  stdout { codec => rubydebug }
  elasticsearch {
    hosts => ["34.126.140.62:9200"]
    index => "iis-log-collection"
  }
}

In the input section, Logstash listens for Beats connections on port 5044. This port opens automatically when Logstash starts with this config. The ways to run the config are described further below.

In the output section, the data is sent to Elasticsearch under the index “iis-log-collection”. Elasticsearch creates this index automatically once the first event arrives through port 5044 and is indexed.

To learn more about the Logstash configuration syntax, see the official Logstash documentation.
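To see what the grok and date filters in the config above extract, here is a rough Python equivalent. It is a simplified sketch: the regular expression uses loose \S+ matches rather than the exact grok patterns, parse_iis_line is a hypothetical helper, and sample_line is a made-up log line in the field order the grok pattern expects.

```python
import re
from datetime import datetime, timezone

# Same field names and order as the grok pattern, with simplified matching.
IIS_LINE = re.compile(
    r"(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<site>\S+) (?P<method>\w+) (?P<page>\S+) (?P<querystring>\S+) "
    r"(?P<port>\d+) (?P<username>\S+) (?P<clienthost>\S+) (?P<useragent>\S+) "
    r"(?P<referer>\S+) (?P<response>\d+) (?P<subresponse>\d+) "
    r"(?P<scstatus>\d+) (?P<time_taken>\d+)$"
)


def parse_iis_line(line):
    """Split one IIS log line into named fields, or return None if it does not match."""
    match = IIS_LINE.match(line.strip())
    if match is None:
        return None  # header lines starting with '#' end up here
    fields = match.groupdict()
    # Like the date filter: read the log timestamp as UTC.
    fields["@timestamp"] = datetime.strptime(
        fields["timestamp"], "%Y-%m-%d %H:%M:%S"
    ).replace(tzinfo=timezone.utc)
    return fields


# Made-up example line, not taken from a real server.
sample_line = (
    "2022-03-12 08:26:58 10.0.0.4 GET /home/index - 443 - 203.0.113.7 "
    "Mozilla/5.0+(Windows+NT+10.0) https://example.com/ 200 0 0 125"
)

parsed = parse_iis_line(sample_line)
print(parsed["method"], parsed["page"], parsed["response"], parsed["time_taken"])
print(parsed["@timestamp"].isoformat())
```

If your IIS site logs a different set of fields, both this expression and the grok pattern need to be adjusted to the same field order.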

To run this config file we can run in two ways:

- Way 1: Running Logstash from the Command-Line.

- Way 2: Running Logstash as a service

For testing purposes, I will use way 1 to run the first-pipeline.conf file with the command:

bin/logstash -f first-pipeline.conf --config.reload.automatic

--config.reload.automatic: with this option, Logstash reloads the config whenever you update first-pipeline.conf, so you do not need to restart it.

And you will see the result as below:

Logstash is now ready to receive logs from Beats on port 5044. But it is only running in the terminal, so the port closes when the session ends. For permanent use, you need to run Logstash as a service, which by default loads config files from /etc/logstash/conf.d/.

We will set up Filebeat in the next step below.

Day 4. Set up & configure Filebeat on Windows

Download Filebeat from the link below:

https://www.elastic.co/downloads/beats/filebeat

In the Choose platform combobox, select the Windows ZIP x86_64 option, then unzip the download.

Filebeat supports many modules for collecting log data, including an IIS module for logs on an IIS server. But in this example I want to collect logs from specific folders, so I will not use the IIS module.

Open filebeat.yml in the folder you just unzipped and edit it as below:

The Filebeat config has two main parts: input and output.

Input: I set the IIS log folders that I need to collect.

Output: set the addresses of Kibana and Logstash. The Logstash output points at port 5044, and the data is sent to that port.

Next, open PowerShell as Administrator, change to the folder containing the filebeat.yml file and run the command below to install Filebeat as a Windows service:

powershell.exe -ExecutionPolicy UnRestricted -File .\install-service-filebeat.ps1

Then run the command below to start the Filebeat service on Windows:

Start-Service filebeat

You can also run Filebeat in the foreground with the command below to watch it collect data and push it to Logstash:

.\filebeat.exe -e -c filebeat.yml

You will see Filebeat send the log data to Logstash as below:

And now you can access Kibana and try to create an Index Pattern as in the image below:

And then you can access the Discover page to see the result.
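Besides the Discover page, you can confirm that events reached Elasticsearch by asking for the document count of the index. The sketch below uses the _count endpoint of the Elasticsearch REST API; count_url, parse_count and fetch_count are hypothetical helper names, and the host localhost:9200 is an assumption that you should replace with your own server address.

```python
import json
import urllib.request


def count_url(host, index):
    """Build the URL of the _count endpoint for one index."""
    return f"http://{host}/{index}/_count"


def parse_count(body):
    """Extract the document count from a _count response body."""
    return json.loads(body)["count"]


def fetch_count(host, index, timeout=5.0):
    """Ask Elasticsearch how many documents the index holds (needs network access)."""
    with urllib.request.urlopen(count_url(host, index), timeout=timeout) as resp:
        return parse_count(resp.read().decode("utf-8"))


# Offline demonstration with a made-up response body.
print(count_url("localhost:9200", "iis-log-collection"))
print(parse_count('{"count": 42, "_shards": {"total": 1, "successful": 1}}'))

# With a reachable Elasticsearch you could run:
# print(fetch_count("localhost:9200", "iis-log-collection"))
```

A count that keeps growing while the website receives traffic suggests that the Filebeat, Logstash and Elasticsearch chain is working end to end.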

Reference documents

- https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-installation-configuration.html

- https://www.elastic.co/guide/en/beats/filebeat/current/setting-up-and-running.html

- https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-modules.html

- https://www.elastic.co/guide/en/logstash/7.0/config-examples.html

- https://www.elastic.co/guide/en/logstash/current/netflow-module.html

- https://github.com/newrelic/logstash-examples

List questions & answers

- 1. What is ELK stack?

ELK Stack is the leading open-source IT log management solution for companies that want the benefits of a centralized logging solution without the enterprise software price. Elasticsearch, Logstash and Kibana, when used together, form an end-to-end stack (ELK Stack) and real-time data analytics tool that provides actionable insights from almost any type of structured and unstructured data source.

- 2. What is the ELK stack used for?

- 3. Can Filebeat send directly to Elasticsearch?

- 4. Can Filebeat have multiple outputs?
